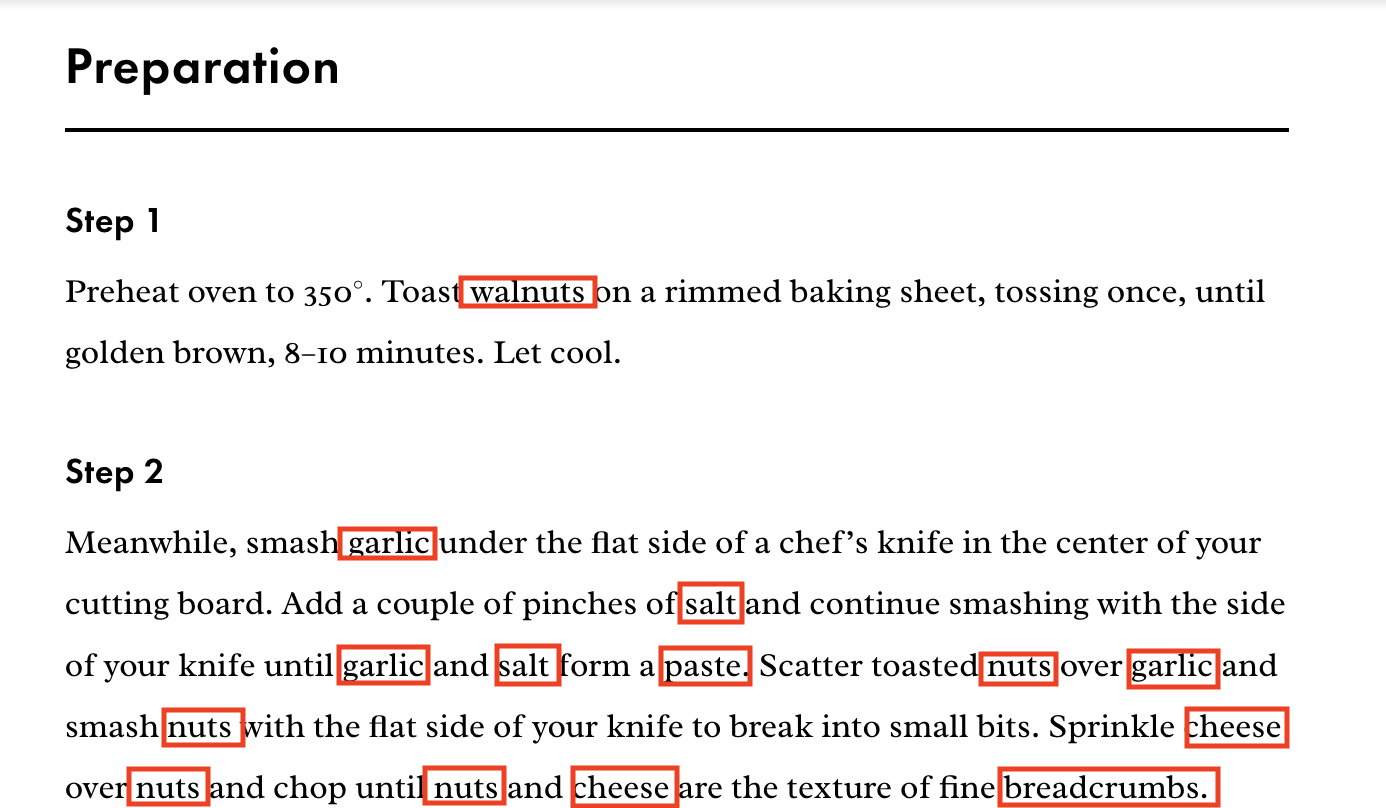

Computers are good at processing large amounts of information, but bad at intuiting what that information actually is. For an ongoing research project looking at mentions of food in online media, we’re trying to help computers get better at recognizing entities in unstructured text. Given the text of an arbitrary webpage or social media post, how successfully can we get a computer to pull out all the words that are food?

How do we recognize foods in texts? source: Bon Appetit

How do we recognize foods in texts? source: Bon Appetit

The term “named entity recognition” describes a set of algorithms designed to adapt computational language models to understanding unique classes of information. As a subset of natural language processing (NLP), the goal of named entity recognition is to assign unique values to words and attribute some meaning to them. This contrasts with most other parts of the NLP pipeline, which assess generalized, syntax-related structures like part of speech and dependencies. The focus of this blog will be on how named entity recognition and adjacent strategies can form structured categories for masses of unstructured data sourced from food-related content. Within computational linguistics, a variety of approaches have formed to optimize the extraction of this sort of information.

Technical Approaches to Named Entity Recognition

Some approaches to named entity recognition do not engage much with machine learning environments. Researchers (generally speaking) have three different types of named entity recognition at their disposal:

The first, referred to as the “dictionary-based approach,” catalogs as many known named entities as possible within categories. This approach makes intuitive sense since human recall of “named entities” might engage the same sub-categorization process. However, from a computational perspective, dictionary-based recognition can incur a large memory overhead. Additionally, this approach inefficiently allocates this memory; infrequently used words or archaic terms take up space without offering a large use value. More importantly, the dictionary approach cannot incorporate new words into its lexicon, as it has no means of categorizing available words into existing datasets. Here, intuition breaks apart since the human brain can do this by processing new information about unfamiliar entities. Even if this hurdle were overcome, the dictionary might struggle to categorize words with a variety of applications in different “subcategories.” Thus, the dictionary-based approach does not offer suitable performance for our task at hand.

The second, known as the “rule-based approach,” employs linguistic patterns and context in order to determine classification of words. Consider the sentence “put the pot of pasta on the stove.” If we are looking for the food term, we can use linguistic features to get there. We can see the entity we are searching for, “pasta,” and within English syntax we can locate this as a descriptor of the direct object of the sentence, “pot.” Within a computational context, the source for rule-based named entity recognition comes in tagging the dependencies of individual words within a sentence; it utilizes the dependency structure, as opposed to semantic proximity, to determine the likelihood of a word appearing as a named entity. This approach still requires the use of machine learning, since neural networks must model sentences into a list of dependencies, and a training set that describes the named entity and its location within the dependency structure must be created for this approach to succeed. However, the reliance on linguistic rules can lessen the complexity of the algorithm, as well as improve accuracy of findings regardless of irregularly named entities, such as those with two or three words. This approach sees benefits within the silo we are working in. Research of food recognition has employed a rule-based approach to high rates of precision and recall. Rule-based approaches show some promise for our task, and we’ll return to them later in this discussion.

Finally, the “machine-learning-based approach” relies purely on the proximity of words to one another to predict named entities. Using a labeled training set of data, the machine attempts to classify entities, and then checks its work. Over time, the system should become capable of determining food entities, independent of anything other than the string of text fed to it. This is computationally heavy, but a powerful means of inculcating independent machine recognition. The benefits to this independence are immense; it means that over time, irregular patterns, and unfamiliar terminology can be incorporated with greater success and reflection of reality. As such, most research around named entity recognition has coalesced around this approach, taking insights where needed from the prior two.

One approach to entity classification via machine learning begins with the word2vec - high dimensional vectors representing word meanings and use. In our context, it is helpful to understand word2vec as a prerequisite to named entity recognition techniques (as opposed to its own, freestanding method). Broadly speaking, word2vec utilizes the machine learning approach described above to categorize words based on their proximity, and then mutates auxiliary data structures to reflect this. Some implementations, like skip-grams and bag-of-words, do this very directly through enumeration of words within a given proximity. However, I wish to focus on a word embedding implementation. Word embedding quantifies the utility of a given word through representing the word as an n-dimensional vector. At first, these numbers are random, and produce little predictive value; however, through continual training, a model tweaks the values of the word based on its contextual placement. In time, the set represents the proximity of the word to a cluster of adjacent vocabulary (Altosaar). Word embedding is the bedrock of applied named entity recognition. For a machine to understand the use value of a word, we must first make the linguistic assumption that words with similar applications (even if not synonymic) co-occur more frequently than otherwise. Word embedding condenses this process into numerical representations, similar in nature to hashing in cryptography. Embeddings are also, then, predictive in nature. By assigning clusters of relative proximity, we can utilize word embeddings to guess consecutive words in a string. Learn more in this helpful write from Carnegie Mellon.

Recognizing Food Entities

With these approaches defined, how can we incorporate lessons from their use to create an effective named entity recognition model for food? To answer this, we can look at some strategies employed by relevant projects in the existing academic literature.

The first of these, produced by scholars of the Complex Systems Lab based in New Delhi, combines the rule- and machine-learning- based models to create a graph which reflects the recognition and tagging of ingredients, the processes employed to mutate the ingredients, and the equipment used to facilitate the processes. First, the model iterates over a set of labeled ingredient data. This data incorporates a variety of attributes, such as quantity, temperature and state of ingredient (e.g. chopped, freeze-dried). With this information, parts of speech and dependencies are then flagged to create relationships to processes and utensils. Finally, an NER tagger is trained to deploy flags on word tokens used to describe processes and utensils, like “mix” and “whisk.” The result of this collection is a repository of inter-connected entities, bound together by relationships inferred from their parts of speech in shared sentences and dependencies on one another.

This approach is at the scale of a single recipe, as opposed to a project that might analyze individual entities over a swath of recipes. This might come as the greatest limitation of this research. An approach based on modeling the relationships within a single entity can be difficult to use larger datasets, as processes and utensils can vary greatly based on application, and do not provide too much valuable information about which foods are used and how they are described. Additionally, the emphasis on the food-making process obscures our focus on the food created, as opposed to its components. Because of this, the object of this research might not seem immediately pertinent to our interests.

However, there are many insights to garner from the methodologies employed. First, the use of some rules-based strategies within the implementation of the machine learning model can be a great asset to our understanding of food entities. Regardless of the desired outcome of our project, using dependency structures to inform how we process food entities can be of use. With our present work, more support is needed to facilitate our model’s recognition of multi-word entities. The use of dependencies can help tag these words as joint entities. Further, the use of multi-faceted ingredient data, such as state and temperature, can add nuance to the sort of final meals described.

The second approach utilizes a rule-based approach in order to flag entities as food classifiers. Developed by Macedonian and Slovenian computer scientists, “FoodIE” utilizes a basic NER model at first but iterates over the training data to develop predictive models based on parts of speech and dependency. Through parti of speech and dependency analytics, the semantic classifications assigned to named entities are more nuanced than other models. Advanced semantic tagging describes each item in the sentence, along with possible classes that the item relates to (for instance, “beef” is classified under the subfamily of “bovines”, and “soup” has additional classifications of “wave” and “cloudy). FoodIE is a highly successful model, with 97.6% and 94.3% precision and recall rates. The model can also distinguish between dishes with more than one word, even in the case of compound nouns like “fruit salad.” This suggests that the more a model can incorporate insights from the context of the text, the more granular its observations will be.

From these case studies, as well as the survey of literature on named entity recognition, two pertinent insights should be incorporated into our research. First, our model needs to better identify multi-word entities. Thus far, the model atomizes each word and does not consider context when flagging words. One tool to adopt from the second approach is dependency checks. If our model discovers a classifier belonging to food (which it does with high recall), we must build support for it to consider incorporating surrounding words into the final entity. The model is already capable of this for other tags developed in the broader NER model but does not have the power to do so for our tags. Second, we ought to consider lemmatizing our entities. I have noticed that our early iterations of the model struggle to comprehend words used in different contexts with hanging characters (for instance, our model currently tags egg, but not eggs). This is a simple fix – SpaCy describes its pipeline as lemmatizing succeeding named entity recognition, but this is not fixed, so the order of processing can be inverted. We might want to use this inversion more broadly by scanning for entities first, then running a set of algorithms to determine the belongingness of adjacent words in dependency or part of speech. For lemmatization, however, that must precede NER.

Conclusion

Generally, incorporating named entity recognition techniques will prove essential on our own quest to map cultural signifiers attached to food. The choices we make in how we recognize food-based entities as such varies greatly depending on our use case. A survey of relevant literature grounds our research by providing leads to solve problems along the development process as well as spark new ideas for manipulating existing datasets. No approach that we choose will yield perfect results, so it is important to know how to manage our margin of error to fit the task at hand. Ultimately, we hope to make decisions that harmonize the flow of information at each technical step of our model’s pipeline. Once it is performing well, we look forward to sharing this model.